Couchbase, Inc., the company behind a developer data platform for AI-driven applications, has integrated NVIDIA NIM microservices into its Capella AI Model Services to simplify and accelerate the deployment of AI-powered applications. NIM is part of the NVIDIA AI Enterprise software platform, and the integration gives enterprises a way to run generative AI (GenAI) models securely while addressing privacy, scalability, and performance needs.

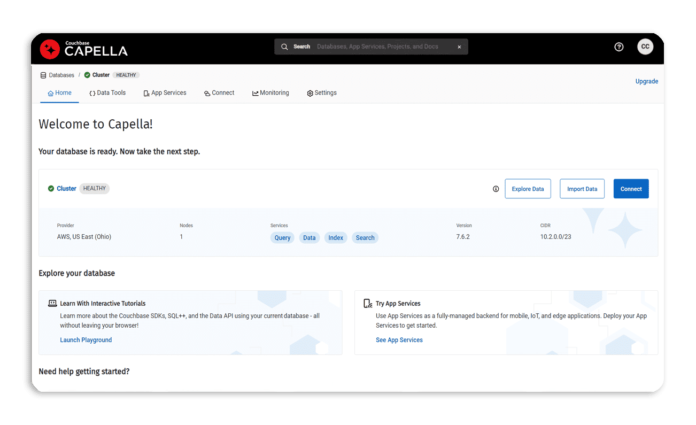

Capella AI Model Services, recently launched as part of Couchbase’s broader AI offerings, provide managed endpoints for large language models (LLMs) and embedding models so that companies can develop and deploy agentic applications with low latency. Colocating data and models keeps retrieval and inference close together, which improves efficiency and reliability and strengthens capabilities such as retrieval-augmented generation (RAG), yielding faster, more accurate responses.

“Enterprises need a unified data platform to support the entire AI lifecycle,” said Matt McDonough, SVP of product and partners at Couchbase. “By integrating NVIDIA NIM microservices, we enable businesses to run preferred AI models in a secure and governed way, ensuring seamless scalability and optimized performance for AI workloads.”

The partnership is intended to reduce the complexity and cost of deploying AI solutions while maintaining compliance and privacy, benefits that reach both enterprises and the consumers who use their applications. With features such as semantic caching and real-time monitoring, Capella AI Services aim to make AI-powered applications more reliable and accurate, mitigating risks such as AI hallucinations and privacy violations.
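For readers unfamiliar with semantic caching, the general idea can be sketched in a few lines of Python. This is an illustration only and does not reflect Couchbase's or NVIDIA's implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and `SemanticCache`, `answer`, `call_model`, and the 0.8 similarity threshold are all hypothetical names and values chosen for the example.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticCache:
    """Hypothetical cache that matches prompts by similarity, not exact text."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # assumed cutoff; tune for a real model
        self.entries = []           # list of (embedding, response) pairs

    def get(self, prompt):
        """Return the response of the most similar cached prompt, or None."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt, response):
        self.entries.append((embed(prompt), response))


def answer(prompt, cache, call_model):
    """Serve from the cache when a similar prompt was seen; otherwise call the model."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

In this sketch, a prompt that is worded almost identically to an earlier one is answered from the cache without a second model call, which is where the latency and cost savings come from. A production system would typically use learned embeddings and a vector index rather than a linear scan.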